AWS Simplifies AI App Development, Deployment

The giant cloud provider unveils its Deep Learning Containers at its Summit event.

March 27, 2019

Amazon Web Services is offering a containerized service to make it easier for enterprises to get their AI-based applications deployed and running in the public cloud.

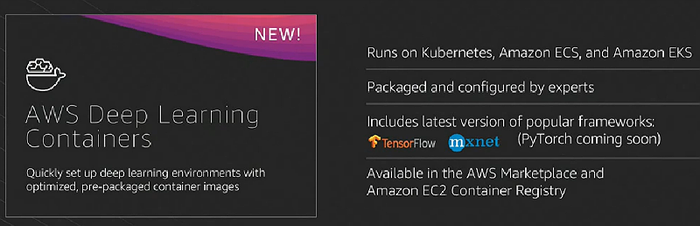

At the company’s AWS Summit this week, Matt Wood, general manager of deep learning and AI at AWS, introduced AWS Deep Learning Containers, a collection of Docker images that come with popular deep-learning frameworks for training and inference, initially Google’s TensorFlow and Apache MXNet. More frameworks, including PyTorch, will follow, Wood said from the stage at the event in Santa Clara, California.

The idea is to remove some of the complexity involved with developing AI- and deep-learning-based applications and then deploying them into the cloud.

“We’ve done all the hard work of building, compiling, generating, configuring [and] optimizing all of these frameworks,” Wood said during the first day of the AWS Summit, which had 6,500 attendees. “That means that you do less of the undifferentiated heavy lifting of installing these very complicated frameworks and then maintaining them.”

The images in the AWS Deep Learning Containers are preconfigured and validated by AWS and can run on a broad array of AWS services, including Amazon Elastic Container Service (ECS), a managed container service; Amazon Elastic Container Service for Kubernetes (EKS), a managed Kubernetes service; and Amazon Elastic Compute Cloud (EC2).

“We built these containers after our customers told us that they are using Amazon EKS and ECS to deploy their TensorFlow workloads to the cloud, and asked us to make that task as simple and straightforward as possible,” AWS chief evangelist Jeff Barr wrote in a blog post. “While we were at it, we optimized the images for use on AWS with the goal of reducing training time and increasing inferencing performance.”

Over the past few years, AI, machine learning and deep learning have become a key focus for cloud providers like AWS, Microsoft Azure, Google Cloud Platform and IBM Cloud. Organizations increasingly are using these technologies to drive efficiencies, reduce costs and make better business decisions, and they see public cloud infrastructure and services as a way to deploy and run them more easily. In a report on cloud computing released this week by cloud workload automation specialist Turbonomic, more than half of the organizations surveyed said they are adopting AI and machine learning in their applications, and 45 percent said they are adopting the technologies for application management.

In addition, 43 percent of organizations are adopting AIOps this year, compared to 32 percent in 2018, according to the survey’s authors.

“You’re starting to see tens of thousands of companies starting to apply machine learning to [address] core challenges,” AWS’ Wood said. “Machine learning has arrived in virtually every industry.”

The introduction of the AWS Deep Learning Containers is the latest step by the world’s largest public cloud provider to grow its AI and machine-learning capabilities. In November, the company unveiled its Inferentia machine-learning inference chip, a move that followed the example of Google – which has had its Tensor Processing Unit (TPU) since 2016 – and Chinese e-commerce giant Alibaba, which also is developing its own AI-focused processor.

In addition, AWS offers the Amazon Elastic Inference service, which enables customers to use GPU-powered acceleration for inference on EC2 or SageMaker instances. In another blog post this week, Shashank Prasanna, an AI and machine-learning technical evangelist at AWS, outlined steps organizations can take to use AWS Spot Instances to run deep-learning training jobs relatively inexpensively. AWS also outlined how organizations can improve inference performance and cost savings when using Amazon Elastic Inference with MXNet.

The AWS Deep Learning Containers are available now at no charge in AWS Marketplace and Amazon Elastic Container Registry (ECR).

They were among a number of announcements AWS made during the Summit. Others included:

The general availability of the AWS App Mesh for efficiently routing and monitoring traffic in AWS services – including Fargate, EC2, ECS and EKS – giving organizations better visibility and insight into traffic issues and the ability to re-route traffic as needed.

Concurrency Scaling for the Amazon Redshift data warehouse, which lets enterprises quickly add query processing power to keep performance consistent when the number of concurrent queries in a workload grows rapidly. Customers pay only for the extra processing power they use, and that capacity is removed when it’s no longer needed.

The addition of the Glacier Deep Archive storage class, which gives organizations an alternative to on-premises tape archives for the long-term storage of large amounts of data. Pricing on the new storage class rivals that of tape; the data is stored across three or more AWS Availability Zones and can be retrieved within 12 hours.

AWS is also expanding its lineup of instances powered by AMD’s Epyc server chips. Last year, the cloud provider unveiled the M5a and R5a EC2 instances, which were built on its Nitro System and cost 10 percent less than comparable EC2 instances. At Summit, it introduced the M5ad and R5ad instances, which are also powered by AMD’s Epyc 7000 series and built on Nitro, and which add block storage to the M5a and R5a designs, according to AWS’ Barr.
