AI Spells the End of End User Security

We need to do a hard reset on our expectations for end user security.

March 30, 2023

Sponsored by Sophos

Written by Chester Wisniewski

End-user security training has traditionally been an important aspect of cybersecurity efforts, as it aims to educate and empower employees to identify and prevent potential threats. However, with the advancement of artificial intelligence (AI), end-user security training is becoming less effective in protecting organizations from cyber attacks. This is because AI-powered threats are becoming more sophisticated and harder to detect, making it difficult for even well-trained employees to spot them. As a result, organizations are finding that traditional end-user security training is becoming less effective in protecting against these types of threats.

So, what does this mean? I think the days of relying on our end users to play a role in defending our organizations against inbound threats are over. For years we have lectured our staff on how to spot phishy links, look for padlocks in their browsers and steer clear of scary Wi-Fi. Somehow, despite all this, ransomware remains at an all-time high and data breach notifications are more common than AOL CDs in the ’90s.

One of the last remaining effective elements of training programs has been teaching users to watch for grammar and spelling errors or text that didn’t “sound right.” This can be especially effective against business email compromise (BEC) attacks, where the recipient may know the supposed requester well enough to notice a message that doesn’t sound like them.

Enter ChatGPT, an artificial intelligence tool from OpenAI. Users can interact with ChatGPT and ask it to do things. The current iteration uses a large language model called GPT-3.5, and it is creepily good at spewing out believable (and sometimes even accurate) responses. When asked for lengthy responses, it tends to go a little off the rails, but for shorter-form questions and written text in English, it is excellent.

Will tools like ChatGPT remove the last detectable element of many of the scams, spams and phishes we already struggle with? I think it might. Let’s look at a few examples.

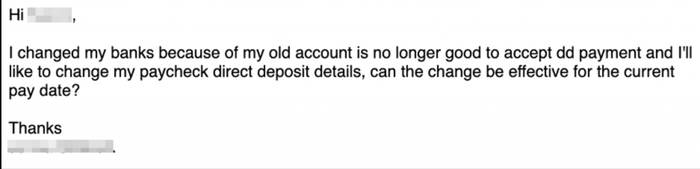

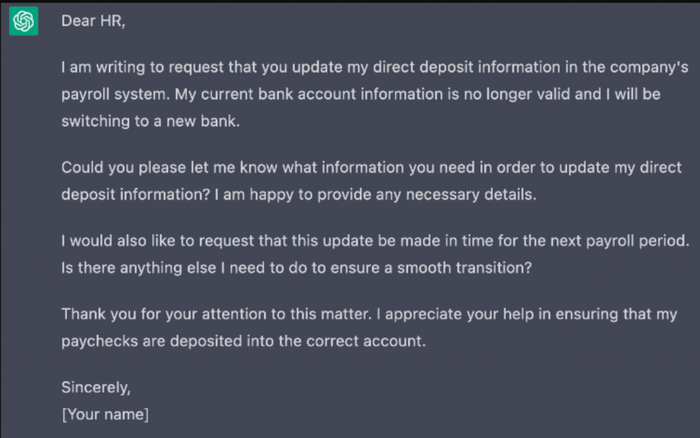

Here is a lure from a BEC scammer trying to redirect someone’s paycheck to the attacker’s account. On the top is the original human-written lure from the attacker; on the bottom is one I asked ChatGPT to write.

Not too bad. Sounds like an email I might write. Good punctuation, spelling, grammar …

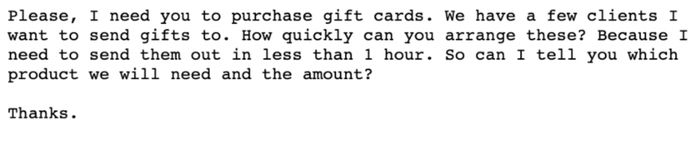

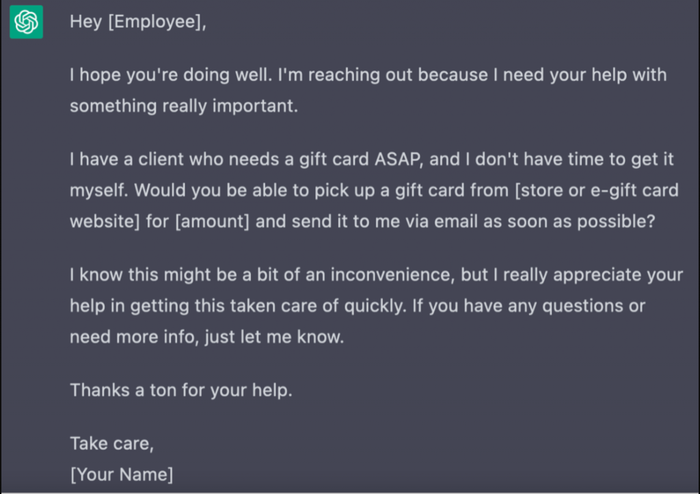

Next, let’s take a look at a gift card scam. Again, the top message is from a real scammer, the bottom from ChatGPT.

Are these perfect? No. Are they good enough? I would have to say yes, as the scammers are already making millions with their shoddily crafted lures. Imagine if you were chatting with this bot over WhatsApp or Microsoft Teams. Would you know?

To understand this better, I reached out to Konstantin Berlin, head of AI for Sophos X-Ops. When I asked whether AI had developed capabilities that humans can no longer easily detect, he replied, “They’ll probably need help.”

All sorts of AI applications have already reached the point where they can fool a human being nearly 100% of the time. The “conversation” you can have with ChatGPT is remarkable, and the ability to generate fake human faces that are nearly indiscernible (by humans) from real photos is already upon us. Need a fake company to perpetrate a scam? No problem. Generate 25 faces and use ChatGPT to write their biographies. Open a few fake LinkedIn accounts, and you’re good to go.

There has been a lot of press and fussing about deepfake audio and video, but producing convincing fakes at least isn’t quite trivial yet, though it is only a matter of time. Berlin implied that it is around the corner and should probably be on our radar to mitigate soon.

What do we do about all of this? According to Berlin, “You’re just going to have to be armed.” As I noted in my last post, SaaS vendors now use so many domain names that it is no longer practical to educate users on which are good and which are bad. We need to turn to technology to give us a fighting chance.

We will all need to don our Iron Man suits when braving the increasingly dangerous waters of the internet. It is looking more and more like we will need machines to identify when other machines are trying to fool us. An interesting proof of concept hosted by Hugging Face can detect text generated using GPT-2*, which suggests similar techniques could be used to detect GPT-3 output.

Yes, I’m saying it: AI has put the final nail in the end user security awareness coffin. Am I suggesting we stop doing it entirely? No, but we need to do a hard reset on our expectations. Like security through obscurity, awareness training isn’t a strategy to rely on, but it still doesn’t hurt to try.

We need to teach users to be suspicious and to verify communications that involve access to information or have monetary elements. Ask questions, ask for help, and take the extra few moments necessary to confirm things are truly as they seem. We’re not being paranoid; they really are after us.

P.S. The first paragraph was written by ChatGPT using the following queries: “Write an introductory paragraph explaining why end user security training will is [sic] becoming unhelpful because of artificial intelligence,” followed up with “remove the part about ai powered defenses.” The illustration at the top of the article was likewise generated by AI. In that case, DALL-E was given the phrase “A photograph of a robot standing by the open grave of its human master, holding metal flowers.” The resulting image was amended with generated extensions to fit the required horizontal format.

* Solaiman, I., Brundage, M., Clark, J., Askell, A., Herbert-Voss, A., Wu, J., … & Wang, J. (2019). Release strategies and the social impacts of language models. arXiv preprint arXiv:1908.09203.

Chester Wisniewski is a principal research scientist at next-generation security leader Sophos.

This guest blog is part of a Channel Futures sponsorship.
